A robots.txt file is a powerful tool that lets webmasters and website owners communicate with search engines about their sites. It tells search engine crawlers (such as those from Google and Bing) which parts of your site they may crawl, or stops them from crawling it at all. In this article, experts from a leading Digital Marketing Agency in Mumbai explain what a robots.txt file is, how to create one, and how to use it to control how your site is crawled while avoiding common mistakes that can hurt your site in search.

Table of contents:

- What is a robots.txt file?

- Do you need a Robots.txt file?

- How does the Robots.txt file work?

- How to create a Robots.txt file?

- How do you check if your Robots.txt is working?

- Submit a robots.txt file to Google

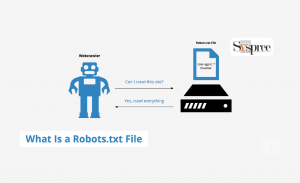

What is a robots.txt file?

A robots.txt file is a plain text file placed in the root folder of your website that tells search engine crawlers which URLs on your site they may and may not crawl.


The robots.txt file is a way to communicate with web crawlers and bots about how they should behave on your site. It tells crawlers which pages or sections they shouldn’t crawl. That makes it a handy way to keep crawlers away from parts of your website that aren’t ready to be seen, but it does not make those parts private: the file itself is publicly readable, and a blocked page can still show up in search results if other pages link to it.

Do you need a Robots.txt file?

A robots.txt file, which follows the Robots Exclusion Protocol (sometimes called the “robots exclusion standard”), is a simple text file placed in the root folder of your website that lets you tell search engines which pages on your site you don’t want them to crawl.

Keeping search engines away from certain pages can be beneficial for both you and your visitors. If a page isn’t relevant to what someone searched for, it’s best not to show it.

For example, if you have a page that doesn’t provide any information that would help visitors find the products they’re looking for, you might want to stop search engines from crawling that particular page. The robots.txt file lets you do exactly that.

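Note, however, that robots.txt is not a way to protect sensitive information such as passwords or customer data. The file is public, and while reputable search engines such as Google and Bing obey its rules, badly behaved bots can simply ignore them. Sensitive content belongs behind a login or other server-side protection. What robots.txt can do is ask well-behaved crawlers to stay away from pages that are still unfinished until they are ready for public consumption.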

You may also want to stop search engines from crawling pages where people post comments or reviews, so that spammy or duplicate content doesn’t dilute the value of the most authoritative pages on your site.

How does the Robots.txt file work?


The robots.txt file is an instruction sheet for search engine robots. It tells them which parts of your site they can access and which pages they should not visit.

The robots.txt file is a text document that you place in the root directory of your website. Most major search engines, such as Google, Yahoo, and Bing, honour it by not crawling the URLs it disallows.

The primary purpose of a robots.txt file is to manage crawler traffic to your site and keep crawlers away from files that aren’t useful in search results. For example, if you have a page on your site that contains pictures of kittens playing with yarn, you might not want search engines to crawl those images, so that people won’t have to wade through them when they do an image search for “adorable cats.”
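As a sketch of the kitten example, assume the pictures live in a hypothetical /images/kittens/ folder. A group aimed at Google’s image crawler, whose user agent token is Googlebot-Image, can ask it to skip that folder. The snippet below checks such a group with Python’s standard urllib.robotparser module. Keep in mind that this module implements simpler matching than Google does (no * wildcards, first matching rule wins), so it is a quick sanity check, not a model of Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep Google's image crawler out of the kitten folder.
ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /images/kittens/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

image_url = "https://www.example.com/images/kittens/yarn.jpg"  # hypothetical URL
image_crawler_allowed = parser.can_fetch("Googlebot-Image", image_url)
web_crawler_allowed = parser.can_fetch("Googlebot", image_url)

print(image_crawler_allowed)  # False: the Googlebot-Image group blocks the folder
print(web_crawler_allowed)    # True: no group in this file applies to Googlebot
```

Because the group names only Googlebot-Image, Google’s regular web crawler is unaffected; add a separate group if you want to restrict it too.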

So how does it work?

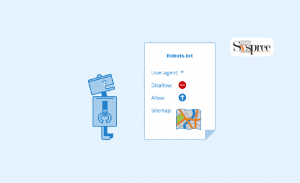

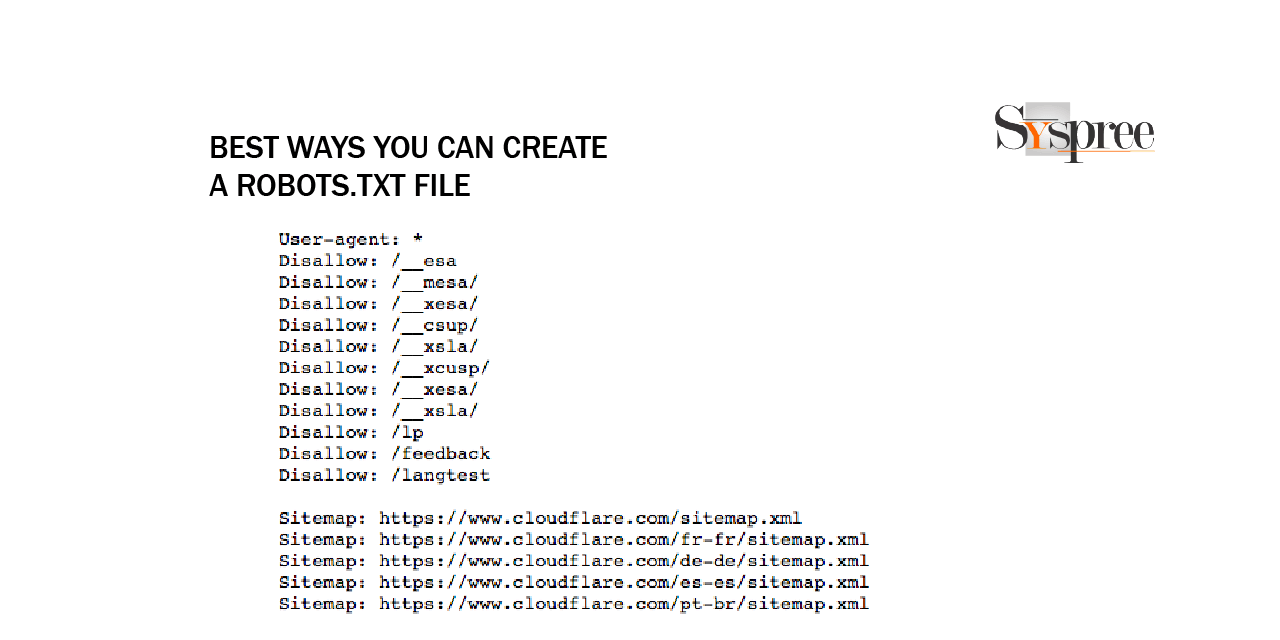

A typical robots.txt file contains a group of rules like this one:

```text
User-agent: *
Disallow: /private_files/
Disallow: /link_to_private_files/
```

The first line, User-agent: *, says that the rules which follow apply to all crawlers (user agents). The second and third lines tell those crawlers not to crawl any URL whose path begins with /private_files/ or /link_to_private_files/. Every other URL on the site remains open to crawling.
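To see these three lines in action, here is a minimal sketch using Python’s standard urllib.robotparser module. The page paths and the crawler name “AnyBot” are hypothetical. The group is written with no blank lines between its directives, because Python’s parser (like the original robots.txt convention) treats a blank line as the end of a group.

```python
from urllib.robotparser import RobotFileParser

# The example group, with no blank lines between directives.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private_files/
Disallow: /link_to_private_files/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical paths on www.example.com; "AnyBot" stands in for any crawler.
results = {}
for path in ["/private_files/report.pdf", "/link_to_private_files/", "/blog/post.html"]:
    results[path] = parser.can_fetch("AnyBot", "https://www.example.com" + path)

for path, allowed in results.items():
    print(path, "->", "allowed" if allowed else "blocked")
```

The first two paths are reported as blocked and the blog post as allowed, matching the explanation above.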

Another example:

If you don’t want Googlebot to crawl anything in your /private/ directory, add the following group:

```text
User-agent: Googlebot
Disallow: /private/
```
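A quick way to confirm that a Googlebot-only group affects only Googlebot is the sketch below, again using Python’s standard urllib.robotparser. It writes the rule as Disallow: /private/ because this module does not understand * wildcards; for Google the two forms are equivalent, since a trailing * is ignored. The URL is hypothetical.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: Googlebot",
    "Disallow: /private/",
])

url = "https://www.example.com/private/notes.html"  # hypothetical URL
googlebot_allowed = parser.can_fetch("Googlebot", url)  # False: the group matches Googlebot
bingbot_allowed = parser.can_fetch("Bingbot", url)      # True: no group matches Bingbot
print(googlebot_allowed, bingbot_allowed)
```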

The following example is for a site that doesn’t want to be crawled by anyone:

```text
User-agent: *
Disallow: /
```
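Since every URL path starts with /, the single rule Disallow: / blocks the whole site for every compliant crawler. The sketch below checks that with Python’s standard urllib.robotparser; the URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse(["User-agent: *", "Disallow: /"])

# Hypothetical URLs: with "Disallow: /", every path on the site is blocked.
urls = [
    "https://www.example.com/",
    "https://www.example.com/products/shoes.html",
]
verdicts = [parser.can_fetch(agent, url) for agent in ("Googlebot", "Bingbot") for url in urls]
print(verdicts)  # [False, False, False, False]
```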

Experts at a leading SEO company in Mumbai will tell you that if you have already set up a robots.txt file and are not happy with the results, you can tweak it to get different results. Keep in mind, though, that robots.txt controls crawling, not indexing: blocking a URL in robots.txt does not remove it from Google’s index. To keep a page out of Google’s results, use a noindex rule (and leave the page crawlable so Google can see that rule), or use the Removals tool in Search Console.

How to create a Robots.txt file?

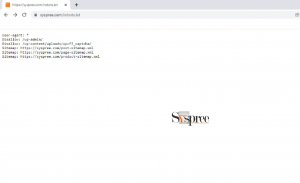

A robots.txt file (the file defined by the Robots Exclusion Protocol) tells search engine crawlers which pages and content on your website they should not crawl. It resides in the root directory of your website: for the website www.example.com, the robots.txt file lives at www.example.com/robots.txt. If a site has no robots.txt file, or an empty one, crawlers assume that every URL may be crawled. Robots.txt can ask crawlers to stay out of certain paths, but it cannot make files private, because anyone can still open a blocked URL directly. The Crawl-delay directive, which some crawlers support to slow down their requests, does not restrict access at all, and Google ignores it.

Consider a simple robots.txt file that does the following:

- It tells the user agent named Googlebot not to crawl any URL beginning with http://example.com/nogooglebot/.

- All other user agents are free to crawl the entire site.

- This site’s sitemap is located at http://www.example.com/sitemap.xml.
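A robots.txt file matching those three bullets might look like the one embedded in the sketch below. It is a reconstruction from the description, not a copy of any specific file. The Python code parses it with the standard urllib.robotparser module (site_maps() needs Python 3.8 or later), which applies simpler matching than Google, so treat it as a sanity check rather than a model of Googlebot. The page URL is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# A file matching the three bullets above (reconstructed; details may differ).
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /nogooglebot/

User-agent: *
Allow: /

Sitemap: http://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

page = "http://example.com/nogooglebot/page.html"  # hypothetical page
googlebot_ok = parser.can_fetch("Googlebot", page)  # blocked by the Googlebot group
other_ok = parser.can_fetch("Bingbot", page)        # falls through to the * group
sitemaps = parser.site_maps()                       # list of Sitemap URLs

print(googlebot_ok, other_ok, sitemaps)
```

The blank lines here are deliberate: they separate the Googlebot group from the catch-all group, and the Sitemap line stands on its own because it does not belong to any user agent.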

Creating a robots.txt file is a simple five-step process:

- Make a file called robots.txt.

- Add rules to the robots.txt file to control search engine crawlers.

- Upload the file named robots.txt to your website.

- Test your robots.txt file.

- Submit a robots.txt file to Google.

Make a file called robots.txt

To create a robots.txt file, you can use any text editor (such as Notepad, TextEdit, vi, or emacs). Do not use a word processor to create your robots.txt file, as word processors often save files in non-web-standard formats that web crawlers can have trouble processing. When prompted during the save file dialogue, make sure to save the file with UTF-8 encoding.
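If you generate the file from a script instead of an editor, the same advice applies: write plain text in UTF-8. The sketch below is one way to do that in Python; write_robots_txt is a hypothetical helper name and the rules are placeholders. It saves into a temporary directory only so the example cleans up after itself; on a real site you would save the file to your web root.

```python
import tempfile
from pathlib import Path

# Hypothetical rules; replace them with your own.
RULES = """\
# robots.txt for www.example.com (hypothetical site)
User-agent: *
Disallow: /private_files/
"""

def write_robots_txt(directory: Path, rules: str) -> Path:
    """Save the rules as plain UTF-8 text named robots.txt in directory."""
    path = directory / "robots.txt"
    # newline="\n" keeps line endings identical on every operating system.
    with open(path, "w", encoding="utf-8", newline="\n") as handle:
        handle.write(rules)
    return path

with tempfile.TemporaryDirectory() as tmp:
    saved = write_robots_txt(Path(tmp), RULES)
    raw = saved.read_bytes()
    print(saved.name, len(raw), "bytes")
```

Python’s "utf-8" codec writes no byte-order mark, so the file starts directly with the first rule or comment.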

Rules regarding the format and location of your robots.txt file:

- A file named robots.txt must reside in your root directory to instruct search engines about which pages on your site should be accessible to web crawlers and which should not.

- The file must be located at the root of the website host it applies to. For instance, to control crawling on all URLs below https://www.example.com/, the robots.txt file must be located at https://www.example.com/robots.txt. You cannot put it in a subdirectory (for example, at https://example.com/pages/robots.txt). If you’re having trouble accessing your website’s root directory, or need permissions to do so, contact your web hosting service provider for assistance. If you can’t access the root directory at all, use an alternative blocking mechanism such as meta tags.

- A robots.txt file can also be posted on a subdomain (for example, https://website.example.com/robots.txt) or on a non-standard port (for example, http://example.com:8181/robots.txt). Each robots.txt file applies only to the protocol, host, and port where it is posted.

- A robots.txt file must be UTF-8 encoded text (ASCII is a subset of UTF-8). Google and other search engines may ignore characters that are not part of the UTF-8 range, which can make rules in your file invalid.
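The location rules above boil down to one computation: take the protocol, host, and port of any page and append /robots.txt. The sketch below expresses that in Python with the standard urllib.parse module; robots_url_for is a hypothetical helper name, and the URLs are examples.

```python
from urllib.parse import urlsplit, urlunsplit

def robots_url_for(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url.

    A robots.txt file applies only to the protocol, host and port it is
    served from, so it always sits at the root of that combination.
    """
    parts = urlsplit(page_url)
    # Keep scheme and netloc (host plus optional port); drop path, query, fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

for url in [
    "https://www.example.com/blog/post.html",
    "https://website.example.com/shop/item?id=7",
    "http://example.com:8181/docs/",
]:
    print(url, "->", robots_url_for(url))
```

The subdomain and the non-standard port each get their own robots.txt URL, which is why a file on www.example.com has no effect on website.example.com.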

Add rules to the robots.txt file to control search engine crawlers.

Rules in a robots.txt file instruct robots about the parts of your site they can or cannot crawl. Follow the tips below when working with your robots.txt file.

- A robots.txt file consists of one or more groups. Each group begins with a User-agent line that specifies which crawler the group applies to, followed by its rules, one per line.

- Each group tells a crawler which directories or files it may and may not crawl. For example, if www.example.com has employee-only files on its server, you can ask crawlers to stay out of that directory. Robots.txt does not actually stop anyone from opening those files, though, so protect them with a password or other server-side access control.

- Crawlers process groups from top to bottom. A user agent can match only one group: the first, most specific group that matches it.

- By default, a crawler may crawl any page or directory that is not blocked by a Disallow rule.

- Rules are case-sensitive. For instance, Disallow: /file.asp applies to https://www.example.com/file.asp but not to https://www.example.com/FILE.asp.

- The # character marks the beginning of a comment. Crawlers ignore comments, but they help anyone trying to read and maintain a robots.txt file.
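Two of those tips, case-sensitive paths and ignored comments, are easy to check locally. In the sketch below (Python’s standard urllib.robotparser; the URLs and the crawler name “AnyBot” are hypothetical), a rule for /file.asp blocks /file.asp but not /FILE.asp, and both the full-line comment and the trailing comment are skipped by the parser.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
# Keep crawlers out of the legacy ASP page (comment lines are ignored).
User-agent: *
Disallow: /file.asp  # a trailing comment is ignored too
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

lower = parser.can_fetch("AnyBot", "https://www.example.com/file.asp")
upper = parser.can_fetch("AnyBot", "https://www.example.com/FILE.asp")
print(lower, upper)  # False True: the rule matches only the exact-case path
```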

Google’s crawlers support the following directives in robots.txt files:

- User-agent

- Disallow

- Allow

- Sitemap

Upload the file named robots.txt to your website

You can work with your web host to upload your robots.txt file. Your host can upload the file for you or give you specific instructions. Check your host’s documentation or contact them directly; for example, search for “upload files infomaniak.”

After you upload the file, test whether your robots.txt is publicly accessible to Google and the rest of the world, and whether Google can parse it.

How do you check if your Robots.txt is working?


Here’s how to see if your robots.txt file is working: open a private browsing window (or browser) and navigate to the location of the robots.txt file, for example, https://example.com/robots.txt. If you see the contents of your robots.txt file in your browser, you’re ready to test its rules.

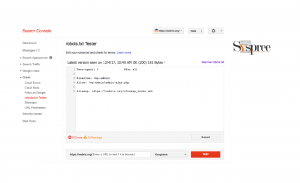

Google offers two options for robots.txt testing:

- The robots.txt Tester in Search Console, which can only test robots.txt files that are already accessible on your site.

- Google’s open-source robots.txt library, which you can check out and build to test robots.txt files locally on your computer.

Submit a robots.txt file to Google

After you upload and test your robots.txt file, Google’s crawlers will automatically discover it and start using it. If you later update your robots.txt file and want Google to refresh its cached copy as quickly as possible, here’s how to submit the updated file.

If you need to update your robots.txt file, download a copy of the file from your site and make the necessary edits.

You can download your robots.txt file in several ways, for example:

- Navigate to your robots.txt file (for example, https://example.com/robots.txt) and copy its contents into a new text file on your computer. Make sure you follow the file-format guidelines when creating the new local file.

- Download a copy of your robots.txt file with the cURL tool, for example: `curl https://example.com/robots.txt -o robots.txt`

- You can download your robots.txt file by using the Robots.txt Tester tool in Search Console.

Update your robots.txt file

Open the robots.txt file you downloaded in a text editor and change the rules as necessary. Make sure you use the correct syntax, and save the file with UTF-8 encoding.

Upload your updated robots.txt file

Upload your robots.txt file to the root of your site as a plain-text file named robots.txt. Experts at a leading Digital Marketing Agency in Mumbai will tell you that the way you upload a file to your site is highly platform-dependent; if you’re unsure, ask your web host how to do it and follow their lead.

Robots.txt is a technology that has matured and changed greatly since it was pioneered.

Many webmasters look at the file, see pages on their sites that Google is ignoring, and conclude that the file is not working. In fact, the cause is often the site’s structure or navigation, such as weak links between the homepage and the inner pages. In many cases, these issues can be solved simply by cleaning up the site’s navigation structure with the help of Google Search Console (formerly known as Google Webmaster Tools).

Conclusion:

Robots.txt files are a key part of the smooth functioning of your website. They tell search engines what should and should not be crawled, which makes robots.txt an essential tool for any website. Google Search Console is another fundamental tool for building an impactful website. Read all about it in our in-depth blog on Google Search Console.
