Overview of Mixtral-8x7B: Significance in AI Technology Advancement

Mistral AI’s most recent creation, Mixtral-8x7B, marks an important moment in the ongoing development of AI technology. Mixtral-8x7B is a high-quality sparse mixture-of-experts (SMoE) model with open weights, and it sets a new standard for what open models can do. The model is available on Hugging Face and released under the Apache 2.0 license, inviting developers and AI enthusiasts to explore its capabilities.

Significance in AI Technology Advancement

Mixtral-8x7B matters because it delivers faster and better performance than many existing models; Mistral AI reports that it outperforms Llama 2 70B on most benchmarks while offering six times faster inference. As AI technology advances, efficiency is a major element of success, and Mixtral-8x7B is built around it. Its strong performance reflects Mistral AI’s dedication to pushing the limits of what AI can do.

Mixtral-8x7B’s architecture also makes it versatile. It can handle a context of 32k tokens and supports English, French, Italian, German, and Spanish. Multilingual support is essential in a globalized world where AI applications must meet the needs of speakers of many languages.

Mixtral-8x7B is a decoder-only model built on a sparse mixture-of-experts network. This design is more than an efficiency gain: it lets the model increase its total number of parameters while keeping cost and latency in check, because each token is processed by only a fraction of those parameters. This is especially useful for large AI workloads where accuracy is essential.

What Is Mixtral-8x7B? Unveiling the Power and Potential

In the constantly evolving field of artificial intelligence, Mistral AI introduces Mixtral-8x7B, an innovative model that pushes the limits of AI technology. This decoder-only sparse mixture-of-experts (SMoE) model is a testament to Mistral AI’s dedication to improvement and efficiency, and it sets a new standard for open-source models.

Licensing and Availability

Mixtral-8x7B is released under the Apache 2.0 license, highlighting Mistral AI’s commitment to collaboration and knowledge-sharing among developers. Because the model is open, users, researchers, and developers can study its inner workings and build on it, helping to advance AI capabilities.

The model is available through Hugging Face, a leading platform for sharing and exploring models, which makes Mixtral-8x7B accessible to a large audience. This release is arguably a smart decision by Mistral AI: it encourages new ideas and experimentation and allows the global AI community to benefit from the full potential of the model.

Architectural Features and Capabilities

Mixtral-8x7B’s architecture is designed for both efficiency and versatility, which makes it stand out among AI models.

Context Handling and Multilingual Support

One of Mixtral-8x7B’s outstanding characteristics is its ability to handle a context of 32k tokens. This large context window lets the model take in and process longer inputs, such as lengthy documents or extended conversations, in a single pass, making it well suited to tasks that depend on a deep understanding of context. This is an important improvement wherever nuanced understanding matters.
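To make the 32k figure concrete, here is a minimal Python sketch of one way to split a long input so that each piece fits in the context window. It assumes the text has already been turned into a list of token IDs by some tokenizer (not shown), takes “32k” to mean 32,768 tokens, and uses hypothetical `reserve_for_output` and `overlap` parameters chosen only for illustration; it is not an official Mistral AI utility.

```python
from typing import List, Sequence

# Assumption: "32k tokens" is taken to mean a 32,768-token window.
CONTEXT_WINDOW = 32_768


def chunk_tokens(
    token_ids: Sequence[int],
    reserve_for_output: int = 1_024,  # hypothetical room left for the model's reply
    overlap: int = 256,  # hypothetical number of tokens repeated between chunks
    window: int = CONTEXT_WINDOW,
) -> List[List[int]]:
    """Split token IDs into overlapping chunks that each fit in the window."""
    budget = window - reserve_for_output  # max prompt tokens per chunk
    if budget <= overlap:
        raise ValueError("window too small for the requested overlap and output reserve")
    if not token_ids:
        return []
    step = budget - overlap  # distance between the starts of consecutive chunks
    chunks: List[List[int]] = []
    start = 0
    while True:
        chunks.append(list(token_ids[start:start + budget]))
        if start + budget >= len(token_ids):
            break  # this chunk reached the end of the input
        start += step
    return chunks


if __name__ == "__main__":
    fake_ids = list(range(70_000))  # stand-in for real tokenizer output
    pieces = chunk_tokens(fake_ids)
    print(len(pieces), [len(p) for p in pieces])  # prints 3 [31744, 31744, 7024]
```

An input that already fits comes back as a single chunk. The overlap is a design choice: it costs a little repeated work but keeps some shared context between neighbouring chunks.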

Mixtral-8x7B also offers strong multilingual support for speakers of different languages. It supports English, French, Italian, German, and Spanish, reflecting Mistral AI’s global outlook for AI. This multilingual capability acknowledges the diverse linguistic needs of AI applications in today’s highly interconnected world.

Sparse Mixture-of-Experts Network

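The heart of Mixtral-8x7B’s design is its sparse mixture-of-experts network. In each layer, a router selects two of eight groups of parameters (the “experts”) to process each token and combines their outputs. This design decision is crucial: it lets the model increase its total number of parameters while keeping computational cost under control. Because only a fraction of the network is active for any given token, the model can handle complicated tasks while addressing the constant challenge of keeping latency low in large-scale AI operations.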

The sparse mixture-of-experts design distributes computation in a more precise and targeted way, which improves the model’s overall performance. This is why Mixtral-8x7B can be described both as an advanced model and as a strategic solution that makes the most of its computational resources.
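The routing idea can be illustrated with a small, self-contained Python sketch. It assumes the top-2 scheme Mistral AI describes for Mixtral: a router scores every expert for a token, only the two highest-scoring experts run, and their outputs are blended using a softmax over those two scores. The toy experts, router weights, and vector sizes below are invented for illustration; in Mixtral-8x7B the experts are full feed-forward blocks and the router is learned during training.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Sequence[float]], Vector]


def top2_moe(
    x: Sequence[float],
    router_weights: Sequence[Sequence[float]],  # one gate row per expert (toy values)
    experts: Sequence[Expert],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Send one token vector to its top-k experts and blend their outputs."""
    # Router: one score per expert, the dot product of the token with that expert's gate row.
    logits = [sum(xi * wi for xi, wi in zip(x, row)) for row in router_weights]
    # Keep only the k highest-scoring experts.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the chosen scores only, so the k gate weights sum to 1.
    top = max(logits[i] for i in chosen)
    exps = [math.exp(logits[i] - top) for i in chosen]
    total = sum(exps)
    gates = [e / total for e in exps]
    # Only the chosen experts are evaluated; the others cost nothing for this token.
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    # Eight toy "experts": expert i simply scales its input by i + 1.
    toy_experts = [lambda v, s=i + 1: [s * t for t in v] for i in range(8)]
    # Toy router: expert i scores i for the token [1, 1], so experts 7 and 6 win.
    toy_router = [[float(i), 0.0] for i in range(8)]
    output, picked = top2_moe([1.0, 1.0], toy_router, toy_experts)
    print(picked, [round(o, 3) for o in output])  # prints [7, 6] [7.731, 7.731]
```

Six of the eight experts are never called for this token, which is the source of the compute savings the section describes.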

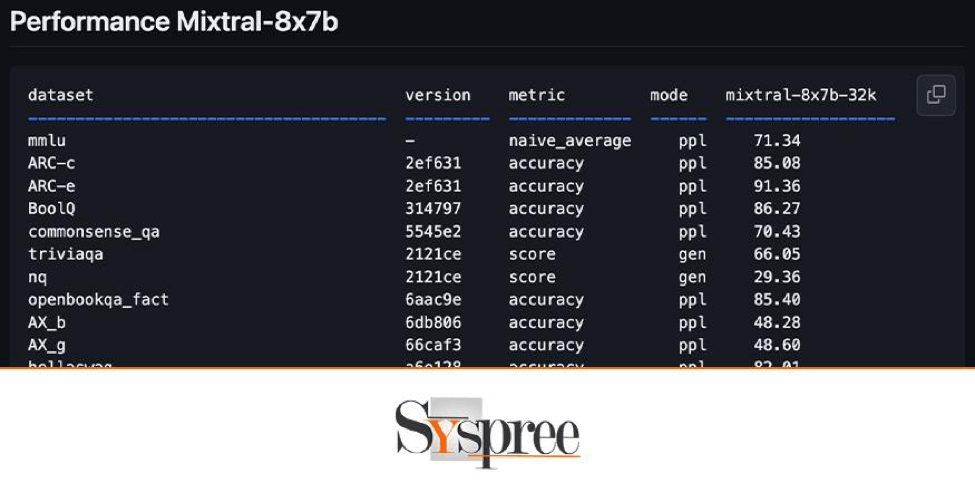

Mixtral-8x7B Performance Metrics

In artificial intelligence, a model’s performance is measured not only by its capabilities but also by how it compares with existing benchmarks. Mistral AI’s Mixtral-8x7B does more than improve on the standard; it sets a new benchmark with strong results across these metrics.

Benchmarking Against Existing Models

Outperforming Llama 2 70B

Mixtral-8x7B’s reputation rests largely on outperforming Llama 2 70B, a larger dense model from Meta, on most benchmarks. This result is more than a numerical win; it reflects real gains in how efficiently the model processes its inputs. In Mistral AI’s comparisons, Mixtral-8x7B delivers better results along with six times faster inference, an essential advantage in the rapidly changing field of artificial intelligence.

Matching GPT-3.5

Mixtral-8x7B does more than play its part among AI models: Mistral AI reports that it matches or outperforms GPT-3.5 on most standard benchmarks. This is an impressive feat given GPT-3.5’s importance as a key player in the AI world. Holding its ground against such a powerful model shows that Mixtral-8x7B is not merely a rival but a serious force in the field.

Efficiency in Scaling Performances

Efficiency is central to Mixtral-8x7B’s design, as its ability to scale performance shows. The model strikes a careful balance between growing its parameter count and controlling computing costs: according to Mistral AI, it has 46.7B parameters in total but uses only 12.9B per token. This is a deliberate choice that lets Mixtral-8x7B meet the demands of complicated AI tasks efficiently and with a high degree of precision.
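The balance between total and per-token parameters can be checked with simple arithmetic. The Python sketch below assumes the architecture values listed in the model’s public Hugging Face configuration (hidden size 4096, 32 layers, expert feed-forward size 14336, 32 attention heads sharing 8 key-value heads, a 32,000-token vocabulary, and 8 experts with 2 active per token) and counts the weight matrices and norm vectors; treat it as an illustrative estimate rather than an official breakdown.

```python
from typing import Tuple


def mixtral_param_counts(
    hidden: int = 4096,
    layers: int = 32,
    ffn: int = 14336,
    vocab: int = 32000,
    n_heads: int = 32,
    n_kv_heads: int = 8,
    n_experts: int = 8,
    experts_per_token: int = 2,
) -> Tuple[int, int]:
    """Return (total, active-per-token) parameter counts under the assumed config."""
    head_dim = hidden // n_heads
    # Attention: Q and O projections are hidden x hidden; K and V are smaller
    # because the query heads share fewer key-value heads (grouped-query attention).
    attention = 2 * hidden * hidden + 2 * hidden * n_kv_heads * head_dim
    # Each expert is a SwiGLU feed-forward block with three weight matrices.
    expert = 3 * hidden * ffn
    router = hidden * n_experts  # gate that scores the experts
    norms = 2 * hidden  # two RMSNorm weight vectors per layer
    shared = attention + router + norms  # used by every token
    # Input embeddings, output head, and the final norm.
    outer = 2 * vocab * hidden + hidden
    total = layers * (shared + n_experts * expert) + outer
    active = layers * (shared + experts_per_token * expert) + outer
    return total, active


if __name__ == "__main__":
    total, active = mixtral_param_counts()
    # prints: total ~ 46.7B, active per token ~ 12.9B
    print(f"total ~ {total / 1e9:.1f}B, active per token ~ {active / 1e9:.1f}B")
```

Under these assumptions the numbers line up with the 46.7B and 12.9B figures above. Per-token compute is therefore closer to that of a dense model of roughly 13B parameters, although memory use still scales with the total, since all experts must be loaded.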

Improvements in Reducing Hallucinations and Biases

Performance on TruthfulQA/BBQ/BOLD Benchmarks

Another distinctive strength of Mixtral-8x7B is its ability to reduce hallucinations and biases, problems that are common among generative AI models. Its results on the TruthfulQA, BBQ, and BOLD benchmarks indicate that it produces more truthful and less biased responses. Fewer hallucinations improve the model’s reliability, making it a more trustworthy tool for users who need accuracy.

Truthful Responses and Positive Sentiments

Mixtral-8x7B stands out at combining truthful responses with positive sentiment. Keeping its answers accurate while maintaining a positive tone results in more refined AI interactions. This combination is especially important wherever an enjoyable user experience is essential.

Proficiency in Multiple Languages

In today’s multilingual world, linguistic diversity is a major consideration when developing AI models. Mixtral-8x7B addresses it by handling several languages: English, French, Italian, German, and Spanish are all supported, reflecting Mistral AI’s determination to build models that cross language boundaries. These multilingual capabilities make the model more versatile and a valuable resource in an increasingly global AI environment.

Mixtral 8x7B Instruct and Its Optimization

Alongside the base model, Mistral AI released Mixtral 8x7B Instruct, a version optimized for following instructions, which increases the model’s adaptability. With a score of 8.30 on MT-Bench, Mixtral 8x7B Instruct emerged as the top open-source model for this task at the time of its release. Its improved performance shows Mistral AI’s determination to address specific needs and further expands the range of uses for Mixtral-8x7B.
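For readers who want to prompt the Instruct model directly, the format shown on its Hugging Face model card wraps each user turn in `[INST]` and `[/INST]` markers: `<s> [INST] Instruction [/INST] Model answer</s> [INST] Follow-up instruction [/INST]`. The helper below is a hypothetical sketch that builds that string from a conversation history. In practice `<s>` and `</s>` are special tokens, so letting the tokenizer handle them (for example through the `apply_chat_template` method in Hugging Face Transformers) is usually safer than plain string concatenation.

```python
from typing import List, Tuple


def build_instruct_prompt(history: List[Tuple[str, str]], next_instruction: str) -> str:
    """Hypothetical helper: format past (instruction, answer) pairs plus a new instruction."""
    prompt = "<s>"  # beginning-of-sequence marker, written once at the start
    for instruction, answer in history:
        # Each completed turn: the instruction inside [INST] markers, then the answer and </s>.
        prompt += f" [INST] {instruction} [/INST] {answer}</s>"
    # The new instruction is left open so the model writes the next answer.
    prompt += f" [INST] {next_instruction} [/INST]"
    return prompt


if __name__ == "__main__":
    # Reproduces the model card's template string exactly.
    print(build_instruct_prompt([("Instruction", "Model answer")], "Follow-up instruction"))
```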

How To Try Mixtral-8x7B: A Guided Exploration Through 4 Demos

The easiest way to explore Mistral AI’s Mixtral-8x7B is through a few informative and engaging demos. They showcase the model’s power and give users a first-hand impression of how it performs in different scenarios.

Important Considerations for Users

Before trying the demos, keep a few important points in mind. As with all generative AI platforms, Mixtral-8x7B can produce inaccurate or unintended output, so use discretion. User feedback is also crucial for improving and refining models.

Demo 1: Perplexity Labs Playground

Trying Mixtral-8x7B

Perplexity Labs Playground offers an interactive way to try Mixtral-8x7B’s capabilities on real prompts. Users can experiment with the model, ask questions, and observe its generative capabilities first-hand. The platform’s user-friendly interface makes it accessible to experienced developers and newcomers alike.

Model Comparison with Llama 2 and Mistral-7b

Inside the Perplexity Labs Playground, users can compare Mixtral-8x7B with other notable models, such as Llama 2 and Mistral-7b. This side-by-side comparison lets users assess Mixtral-8x7B’s strengths and weaknesses relative to these models and provides useful insight into its performance.

Demo 2: Poe Bot

Mixtral-8x7B-Chat Bot Overview

Poe, a platform that hosts various language models including Mixtral-8x7B, provides an interactive environment for exploring the model’s chatbot capabilities. Users can ask questions and observe how Mixtral-8x7B responds in a conversational context, which adds an engaging, conversational angle to exploring the model.

Unofficial Implementation Disclaimer

Note that the Poe bot describes itself as an “unofficial implementation” of Mixtral-8x7B. This label reflects the platform’s transparency: although the bot is not an official Mistral AI release, it still gives users a chance to interact with the model through chat.

As you work through the demos, approach them with curiosity and a clear awareness that AI interactions can be unpredictable. The demos do more than showcase Mixtral-8x7B’s capabilities; they also let users take part in the model’s continual improvement by providing valuable feedback.

User Feedback and Future Developments

In the ever-changing world of artificial intelligence, user feedback is a key factor in shaping the direction of models such as Mixtral-8x7B. Mistral AI recognizes the importance of this partnership and is determined to use users’ insights to drive improvements and further development.

The Role of User Feedback

User feedback guides developers in improving AI models. For Mixtral-8x7B, Mistral AI invites users to share their experiences, comments, observations, and ideas. This feedback loop can be instrumental in identifying areas for improvement, addressing problematic biases, and adjusting the model’s responses to better match user expectations.

The diverse perspectives and interactions users bring to Mixtral-8x7B help build an in-depth understanding of its strengths and weaknesses. Mistral AI values this variety of input and recognizes that users’ collective intelligence plays an essential role in the evolution of AI models.

Mistral AI’s Commitment to Improvement

Mistral AI remains committed to continual improvement. Its development process allows it to ship updates that address the issues users identify, so that Mixtral-8x7B keeps evolving to meet the changing requirements of its users.

This dedication goes beyond solving immediate problems to pursuing long-term improvements. Mistral AI considers Mixtral-8x7B to be more than just a model; it is an evolving system that can adapt to new trends, technological changes, and user expectations. This proactive approach helps the model stay relevant and efficient in a constantly changing AI environment.

Early Access and Integration Opportunities

Mistral AI provides early access to Mixtral-8x7B, allowing developers and businesses to integrate this advanced model into their systems and applications. Early access facilitates real-world testing, allowing users to explore the model’s suitability for diverse use cases. This collaborative approach positions Mistral AI as a partner in innovation, working hand-in-hand with users to uncover the full potential of Mixtral-8x7B.

Integration possibilities extend beyond personal exploration to larger-scale applications in areas such as content generation and natural language processing. Mistral AI invites businesses to consider how Mixtral-8x7B might fit into their workflows to improve productivity and open up creative possibilities.

As users and Mistral AI collaborate, Mixtral-8x7B’s future advancements need not follow a single path; instead, they will be guided by the shared goals and perspectives of users and the wider AI community. Mistral AI encourages users to take an active part in this ongoing story and contribute to the growth of Mixtral-8x7B as it establishes itself as a standard among AI models.

Conclusion

Mixtral-8x7B by Mistral AI marks a significant advancement in AI technology, offering strong performance and versatility. With a 32k-token context window, multilingual support, and Apache 2.0 licensing, it is a reference point in the AI world. Its sparse mixture-of-experts network keeps computation costs down, making it a practical choice. Explore the model on Hugging Face and help improve it by sharing your feedback. If you liked this, check out our previous blog, Will Google Gemini Outperform ChatGPT?