As a web developer, you’re in charge of both the design and the development of your client’s website. You want to make sure that each element is coded and displayed correctly. But when it comes to writing code, there are many things you need to consider to get the best SEO results. When building your WordPress theme or working on a Magento template, remember that certain coding protocols and styles will help your site appear higher in search engine results.

Remember that every great SEO campaign has a great web developer behind it. That developer isn’t necessarily the best programmer, but they know how to get the job done. Anyone can follow a set of guidelines and see some positive results, but that won’t keep you on top for long. The key to success is building as many best practices as possible into your development process. These 12 simple rules every web developer should follow will help you do just that.

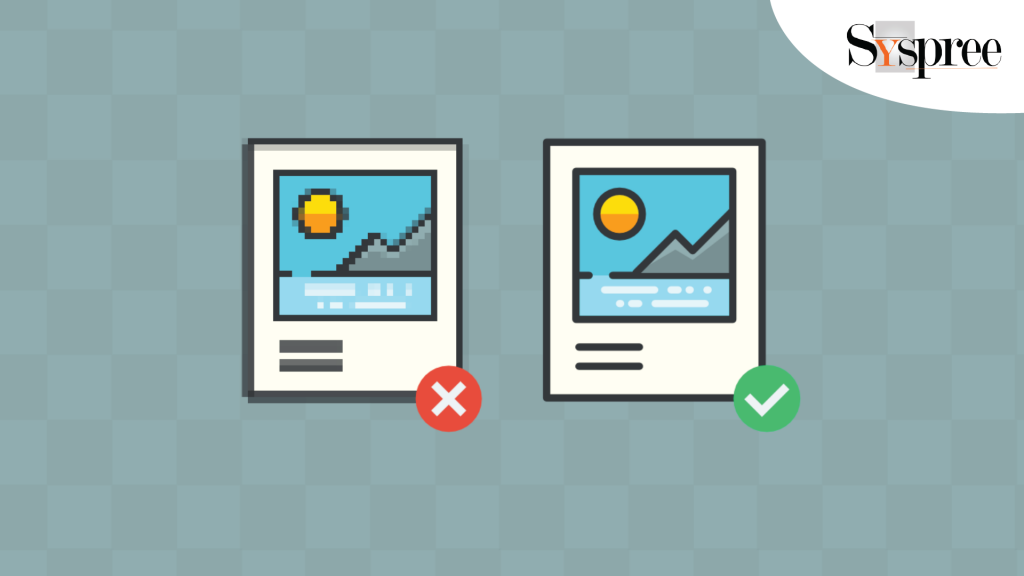

Optimize your images

Images are an important part of a website. They add visual interest to a page and help break up long blocks of text. However, you shouldn’t use images just for the sake of it: images that are relevant to the content will help with SEO.

Use keywords in the alt attribute of each img tag. A website development company would tell you this is especially true when the image is used as a link. Descriptive link text inside an anchor tag (rather than a generic “click here”) is valuable for accessibility and SEO, because it tells users and search engines what the linked page is about. The same holds if you use an image as a link: its alt text should contain keywords specific to the page you’re linking to.
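To find images that are missing alt text, you could run a quick audit over your rendered HTML. The example below is a minimal Python sketch that uses only the standard library's `html.parser`. The function name `audit_alt_text` is our own. It flags both missing and empty `alt` attributes, and it marks images that sit inside links, where alt text matters most. An empty `alt=""` is fine for purely decorative images, so treat the output as a list to review rather than a list of errors.

```python
from html.parser import HTMLParser


class AltTextAuditor(HTMLParser):
    """Collects <img> tags whose alt attribute is missing or empty."""

    def __init__(self):
        super().__init__()
        self.link_depth = 0  # greater than 0 while we are inside an <a> element
        self.problems = []   # (src, inside_link) for each image without alt text

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "a":
            self.link_depth += 1
        elif tag == "img":
            alt = (attributes.get("alt") or "").strip()
            if not alt:
                self.problems.append((attributes.get("src", "?"), self.link_depth > 0))

    def handle_endtag(self, tag):
        if tag == "a" and self.link_depth > 0:
            self.link_depth -= 1


def audit_alt_text(html):
    """Return (src, inside_link) pairs for images that need alt text."""
    auditor = AltTextAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.problems
```

For example, `audit_alt_text('<a href="/red-shoes"><img src="shoes.jpg"></a>')` reports `("shoes.jpg", True)`: a linked image with no description of its target page.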

Compress images before uploading them, so they don’t slow down page loads or take up too much space on your server. You can use online tools like TinyPNG or JPEGmini for this purpose. If you’re using WordPress, several plugins can compress your images automatically on upload.
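Before you upload, it can help to spot the images that most need compressing. This minimal Python sketch walks a folder and lists image files above a size budget. The 200 KB default is an arbitrary figure chosen for illustration, and `find_oversized_images` is a hypothetical helper name. The sketch does not compress anything itself; it only tells you which files to run through a tool like TinyPNG or JPEGmini.

```python
import os

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".webp"}


def find_oversized_images(folder, max_kb=200):
    """Return sorted (path, size_kb) pairs for images in `folder` larger than `max_kb`."""
    oversized = []
    for root, _dirs, files in os.walk(folder):
        for name in files:
            # Only look at common web image formats, ignoring case.
            if os.path.splitext(name)[1].lower() in IMAGE_EXTENSIONS:
                path = os.path.join(root, name)
                size_kb = os.path.getsize(path) / 1024
                if size_kb > max_kb:
                    oversized.append((path, round(size_kb, 1)))
    return sorted(oversized)
```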

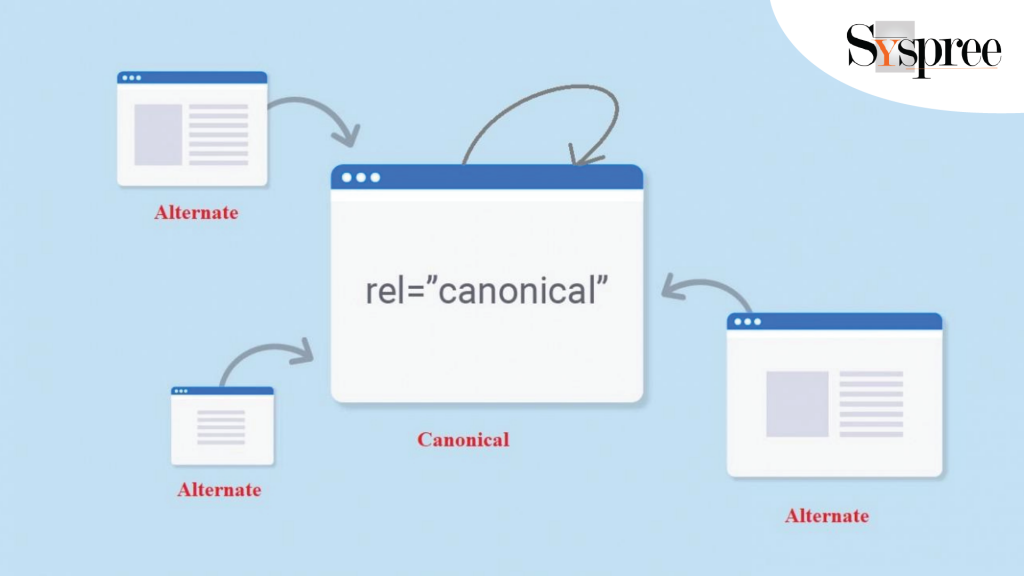

Optimize your canonical tags

A canonical tag (aka “rel canonical”) tells search engines that a specific URL represents the master copy of a page. By declaring a preferred version, it prevents problems caused by identical or “duplicate” content appearing on multiple URLs. It also tells search engines which of those URLs to use when showing the page in search results.

If your site has identical or very similar content accessible through multiple URLs, search engines may struggle to determine which version is the most important. For example, an ecommerce site may make the same product listing available at several URLs:

- http://www.example.com/product-item/unique-name

- http://www.example.com/product-item/unique-name?trackingid=87654321

- http://www.example.com/product-item/?trackingid=87654321&source=facebook

A web development company would tell you that, to avoid duplicate content issues, you should add a canonical tag to each variation that points to the original URL. Search engines will then treat the variations as one page, and search results will direct users to a single version that features a clear, concise description of the content.
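In most content management systems, the canonical URL comes from the page template. The underlying idea can still be sketched in a few lines of Python: strip the query parameters that don't change the content, then emit a `<link rel="canonical">` element for the page's `<head>`. The `TRACKING_PARAMS` set is an assumption based on the example URLs above, and the function names are hypothetical. You would list whichever parameters your own site actually uses.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical set of query parameters that never change the page content.
TRACKING_PARAMS = {"trackingid", "source", "utm_source", "utm_medium", "utm_campaign"}


def canonical_url(url):
    """Drop tracking parameters and any #fragment, keeping other query parameters."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key.lower() not in TRACKING_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def canonical_link_tag(url):
    """Build the <link> element to place in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'
```

With this helper, every tracking variant of `http://www.example.com/product-item/unique-name` produces the same tag pointing at that clean URL.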

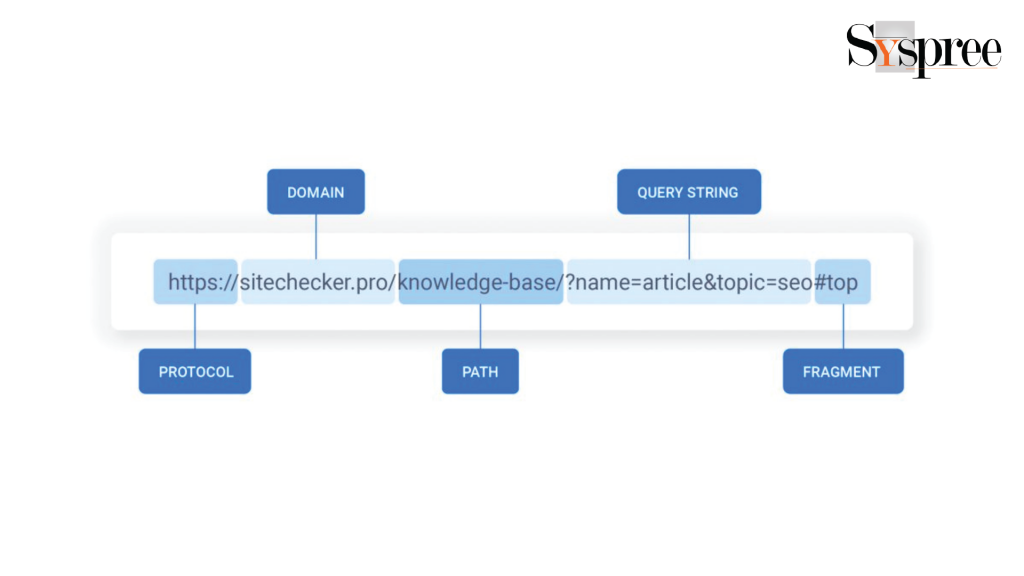

Choose the best URL structure

There are two main options for URL structure:

- Using dynamic parameters, like mywebsite.com?id=1

- Using one of several types of clean URLs, such as mywebsite.com/news/1

The first option is technically easier to implement, but it does little for your website’s search engine ranking and offers nothing to your users. If a user wants to send a link to that page to a friend, which is easier to share: www.mywebsite.com/news/1 or www.mywebsite.com?id=1?

On top of that, a dynamic URL tells search engines nothing about what the page contains. Parameter variations can also create many URLs for the same content, which makes your pages harder to understand and rank. So if you want to build a successful website, use clean URLs and make sure they contain relevant keywords that describe their content.
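Clean, keyword-rich URLs are usually generated from the page title. This minimal Python sketch turns a title into a lowercase, hyphen-separated slug. The `slugify` and `clean_url` names and the `/news/` section are illustrative assumptions; how slugs map back to database records depends on your framework or CMS. The sketch keeps only ASCII letters and digits, so a title written entirely in a non-Latin script would produce an empty slug and would need different handling.

```python
import re
import unicodedata


def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Split accented letters into base letter + accent, then drop the non-ASCII accents.
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace every run of characters other than a-z and 0-9 with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_title.lower())
    return slug.strip("-")


def clean_url(section, title):
    """Build a path like /news/our-new-store-opens instead of ?id=1."""
    return f"/{section}/{slugify(title)}"
```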

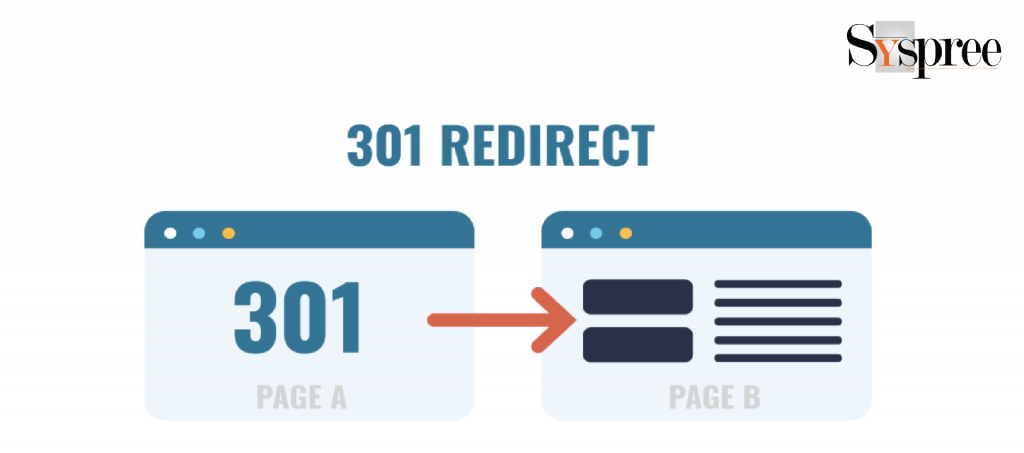

Use 301 redirects properly for SEO

If you’re changing domains or moving URLs around, use a 301 redirect. This is a permanent redirect from one URL to another, and it also passes your link juice (ranking power) to the new page or site. You can check your redirects with a redirect-checker tool.

A 302 redirect, by contrast, is temporary. It tells search engines that the original URL will be back soon, so they should keep it in their index instead of moving its ranking to the new URL. That makes a 302 suitable mainly for short-term situations such as maintenance.
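Redirects are usually configured in your web server or CMS, but the logic is easy to see in code. This minimal Python WSGI sketch maps old paths to new locations using either a permanent 301 or a temporary 302. The paths in `REDIRECTS` are made-up examples, and `redirect_app` is a hypothetical name.

```python
# Hypothetical map of moved URLs: old path -> (new location, status code).
REDIRECTS = {
    "/old-product": ("https://www.example.com/products/new-product", 301),  # moved for good
    "/checkout": ("https://www.example.com/maintenance", 302),              # temporary only
}

STATUS_TEXT = {301: "301 Moved Permanently", 302: "302 Found"}


def redirect_app(environ, start_response):
    """Minimal WSGI app that answers with the redirect configured for the path."""
    path = environ.get("PATH_INFO", "/")
    if path in REDIRECTS:
        location, status = REDIRECTS[path]
        start_response(STATUS_TEXT[status], [("Location", location)])
        return [b""]
    start_response("404 Not Found", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Not found"]
```

You could try it locally with the standard library's `wsgiref.simple_server.make_server`. When the maintenance is over, you would remove the 302 entry so that `/checkout` serves its own content again.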

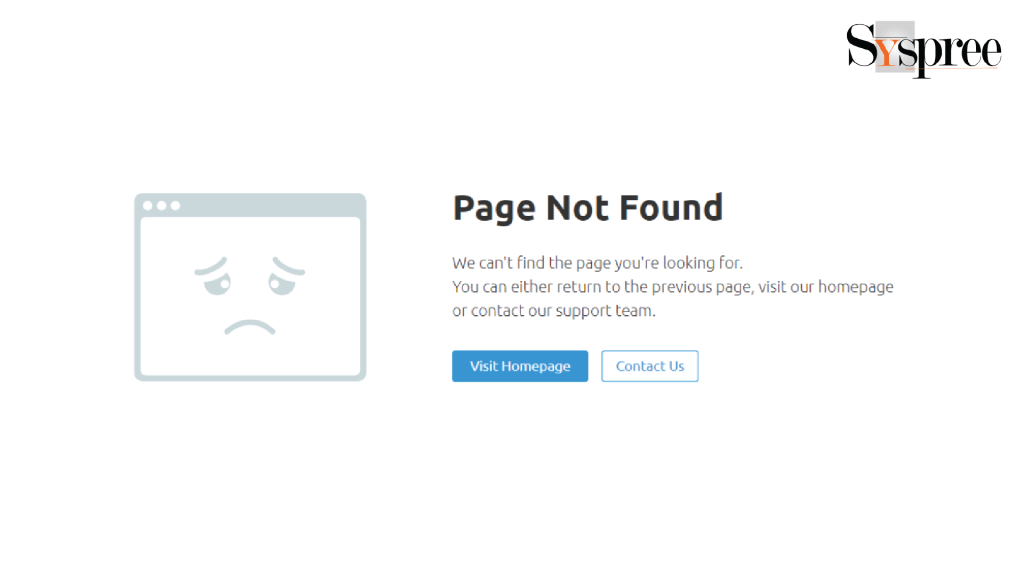

Fix 404 error pages

Check your 404 error pages. When users hit a 404 error, they know something is wrong, but they might not know how to get back to where they were going. A well-designed 404 page that leads them back into your site content is important for usability and for preserving traffic. According to Moz, 404 pages are the third most common landing page for organic traffic, after the homepage and product pages.

Make sure you have an XML sitemap and that all your pages are indexed in Google Search Console. Search engines love sitemaps because they help crawlers find their way around your website more easily.

SEO (search engine optimization) aims to create a competitive advantage by improving a website’s visibility in search engine results pages. To achieve that, web developers need to ensure high-quality content, and then make sure that search engines index all the pages on the site.
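A custom 404 page only helps if the server still answers with a real 404 status code. A friendly page served with `200 OK` can be treated by search engines as a "soft 404". The minimal Python WSGI sketch below shows the idea. The `HELPFUL_LINKS` list and the function names are hypothetical, and most sites would set this up in their CMS or web server instead.

```python
from html import escape

# Hypothetical links to offer visitors who land on a missing page.
HELPFUL_LINKS = [("/", "Home"), ("/products", "Products"), ("/contact", "Contact us")]


def not_found_page(requested_path):
    """Build a friendly 404 page body that links back into the site."""
    links = "".join(f'<li><a href="{href}">{escape(label)}</a></li>' for href, label in HELPFUL_LINKS)
    return (
        "<!DOCTYPE html><html><head><title>Page not found</title></head><body>"
        f"<h1>Sorry, we couldn't find {escape(requested_path)}</h1>"
        f"<p>Try one of these pages instead:</p><ul>{links}</ul></body></html>"
    )


def not_found_app(environ, start_response):
    """WSGI app that serves the friendly page with a real 404 status, not 200."""
    body = not_found_page(environ.get("PATH_INFO", "/")).encode("utf-8")
    start_response("404 Not Found", [("Content-Type", "text/html; charset=utf-8"),
                                     ("Content-Length", str(len(body)))])
    return [body]
```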

Use an SSL certificate for SEO and Security

If you sell products or services on an eCommerce website, it is essential to have a Secure Sockets Layer (SSL) certificate installed. Without it, your rankings can suffer (Google uses HTTPS as a ranking signal), and you will be unable to accept payments securely on your site.

If your host uses cPanel, you can usually set up a free certificate by logging in and selecting “Let’s Encrypt” (the exact option depends on your host). Installing the certificate may not redirect visitors automatically, so also make sure every HTTP URL is redirected to its HTTPS version, so that all data is encrypted before being sent across the web. Check that the padlock icon appears in your browser’s address bar when you visit your website, so that visitors know they can enter their payment details safely.
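If you enforce the HTTP-to-HTTPS redirect in application code rather than in your host's settings, the logic can be sketched as Python WSGI middleware. This sketch assumes your site runs as a WSGI application. It also assumes that, behind a proxy or load balancer, the proxy sets an `X-Forwarded-Proto` header. The `https_redirect` name is hypothetical. The middleware sends a permanent 301 so that ranking signals follow the HTTPS URLs.

```python
from urllib.parse import quote


def https_redirect(app):
    """WSGI middleware that 301-redirects plain-HTTP requests to their HTTPS URL."""
    def wrapper(environ, start_response):
        # Behind a proxy, the original scheme is often passed in X-Forwarded-Proto;
        # trusting that header is an assumption about your hosting setup.
        scheme = environ.get("HTTP_X_FORWARDED_PROTO", environ.get("wsgi.url_scheme", "http"))
        if scheme == "https":
            return app(environ, start_response)
        host = environ.get("HTTP_HOST") or environ.get("SERVER_NAME", "localhost")
        # WSGI passes the path as latin-1 text; re-encode it before percent-quoting.
        path = quote(environ.get("PATH_INFO", "/").encode("latin-1"))
        location = f"https://{host}{path}"
        if environ.get("QUERY_STRING"):
            location += "?" + environ["QUERY_STRING"]
        start_response("301 Moved Permanently", [("Location", location)])
        return [b""]
    return wrapper
```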

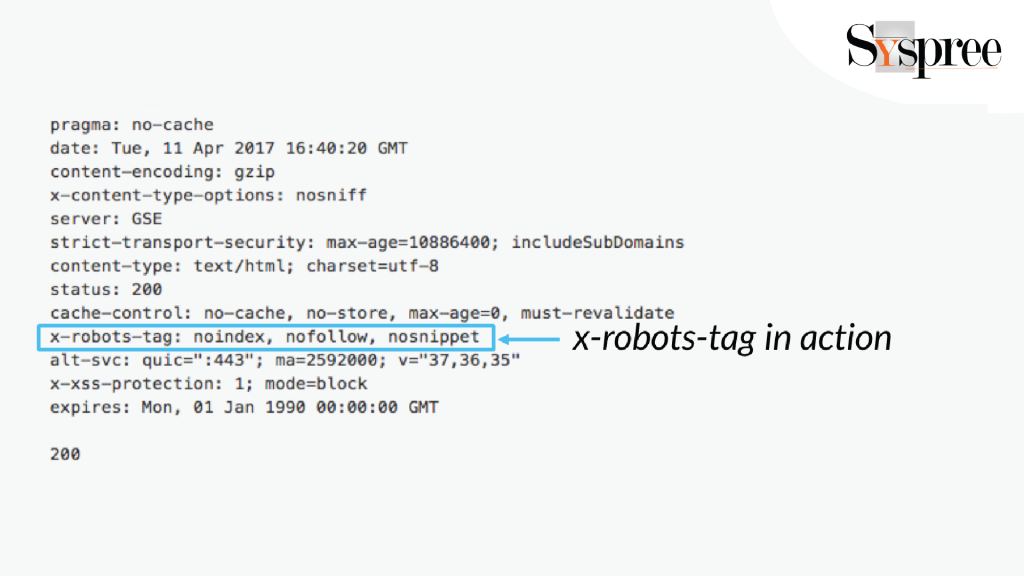

Meta robots directives for SEO purposes

Meta robots directives are instructions that tell search engine crawlers how to index a page and whether to follow its links. Used well, they are an excellent tool.

Meta tags are located in the head section of your HTML document. They will not be visible to your website visitors, but they are very important if you want to provide search engines with information about your page content.

Here are some examples:

- Noindex: You don’t want the page indexed, so add “noindex” to the meta tag.

- Nofollow: You don’t want the links on the page followed, so add “nofollow” to the meta tag.

- Noarchive: You don’t want search engines to show a cached copy of this page, so add “noarchive” to the meta tag.

- Nosnippet: You don’t want a description shown with the search result, so add “nosnippet” to the meta tag.

Meta robots tags control how search engines index your web pages. They also indicate whether search engine bots may follow the links on a page.

There are four meta robots directives that you should be familiar with:

Index/noindex – This directive controls whether or not the search engine will index your page. The index option lets the engine know that it is okay to index your page (this is the default action for all pages). The noindex option tells the engine that it should not include this in its index. You can use this option for development pages or for any other content that you do not want to be associated with your website in any way, shape, or form.

Follow/nofollow – This directive controls whether search engine robots will follow the links on this page. The follow option lets the robot know that it should follow any links on this page (this is the default). The nofollow option tells the robot to ignore all of the links on this page. You can use this option on pages where you are testing code and do not want the robot crawling through all of your unfinished work.
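A small helper can generate these tags consistently, or check that a leftover `noindex` from a development build hasn't slipped onto live pages. This Python sketch uses only the standard library, and the function names are our own. Since `index` and `follow` are the defaults, a page that wants both can simply omit the tag.

```python
from html.parser import HTMLParser


def robots_meta_tag(index=True, follow=True, extra=()):
    """Build a meta robots tag; `extra` can add directives such as "noarchive"."""
    directives = ["index" if index else "noindex", "follow" if follow else "nofollow"]
    directives.extend(extra)
    content = ", ".join(directives)
    return f'<meta name="robots" content="{content}">'


class RobotsMetaReader(HTMLParser):
    """Collects the directives from every <meta name="robots"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(",") if d.strip())


def robots_directives(html):
    """Return the set of robots directives found in `html`."""
    reader = RobotsMetaReader()
    reader.feed(html)
    reader.close()
    return reader.directives
```

Before a launch, for instance, you could fail a build if `"noindex" in robots_directives(page_html)` for any page that is meant to be public.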

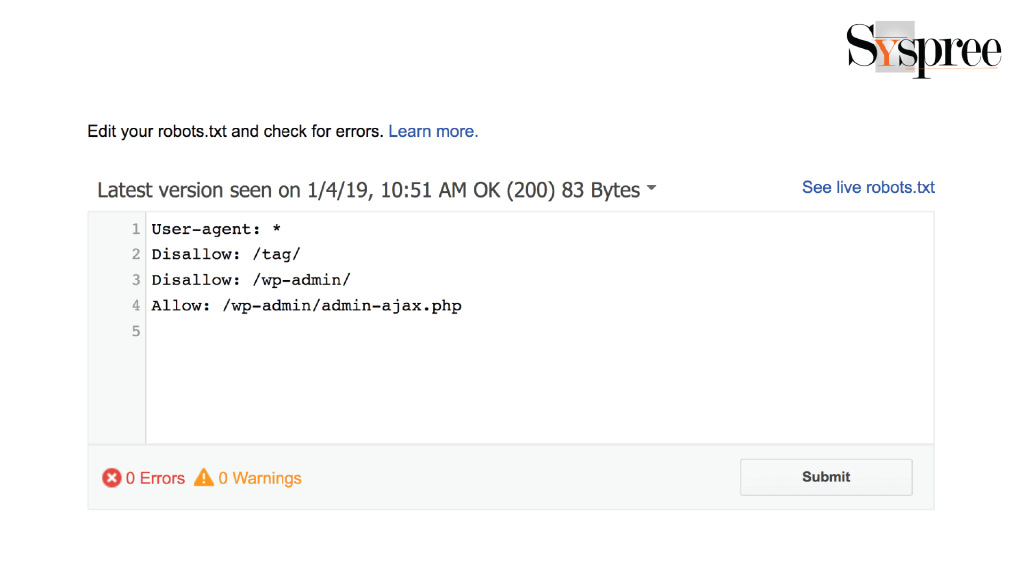

Using Robots.txt File

Robots.txt is a file that can tell search engines like Google which pages or files the crawler can or cannot request from your site. You can use robots.txt to block access to certain folders, such as your images folder, or individual files, such as PDF documents.

Robots.txt also lets you set rules for specific user agents (search engine bots). Keep in mind that robots.txt controls crawling, not indexing: if other pages link to a blocked page, its URL can still show up in search results. To keep a page out of the results, use a noindex meta robots tag on it instead, and don’t block that page in robots.txt, or crawlers will never see the tag.

Create your robots.txt file before submitting your website to Google Search Console and Bing Webmaster Tools, so that your crawl rules are already in place when the search engines start crawling.
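Python's standard library includes `urllib.robotparser`, which you can use to test robots.txt rules before deploying them. The file below is a hypothetical example. It keeps Google's image crawler out of an images folder and blocks a staging area and a PDF folder for all bots. Python's parser only matches plain path prefixes, not the `*` and `$` wildcards that Google supports, so the sketch sticks to prefixes.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one rule group for a specific bot, one for everyone else.
ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /images/

User-agent: *
Disallow: /staging/
Disallow: /downloads/pdf/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent, url in [
    ("Googlebot", "https://www.example.com/staging/new-home"),
    ("Googlebot", "https://www.example.com/products/"),
    ("Googlebot-Image", "https://www.example.com/images/logo.png"),
]:
    print(agent, url, "allowed" if parser.can_fetch(agent, url) else "blocked")
```

A bot obeys only the most specific group that matches it. In this example, Googlebot-Image follows its own group and ignores the `*` rules.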

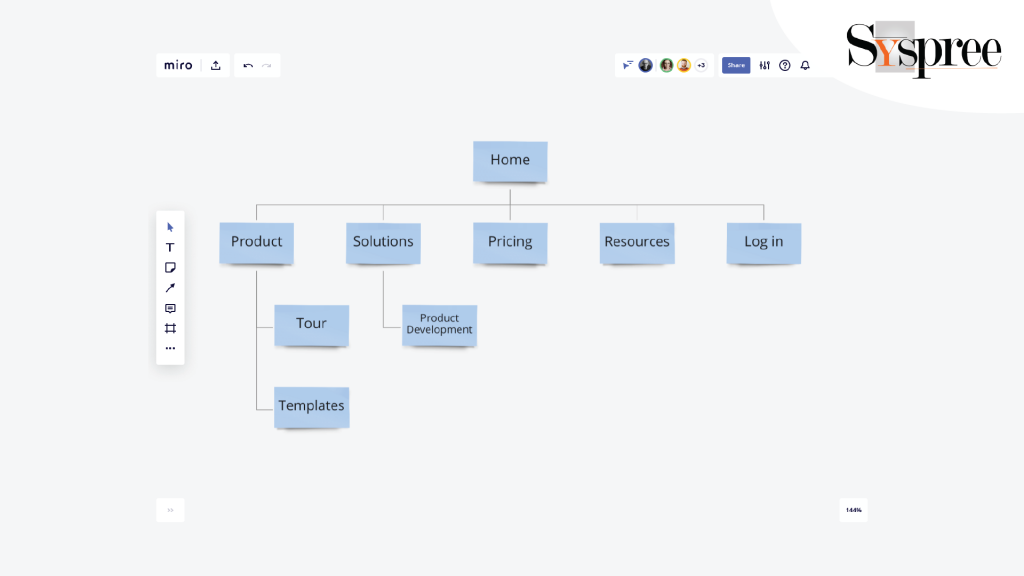

Create a Robust, Informative Sitemap

The best web developers know how to create a sitemap that meets the needs of both users and search engines. A sitemap is like a directory for your website, showing visitors and search engine crawlers where everything is.

A good sitemap should be easy for visitors to use and include all the relevant metadata for search engines to index your pages easily and accurately.

It’s important not to confuse a sitemap with a robots.txt file. A robots.txt file gives instructions to search engine crawlers about which pages or files on your site they’re allowed to access. A sitemap is an XML file that lists the URLs for a site and lets Google know when pages were last updated.

Remember that setting up a sitemap is one of the first things you should do when building a new website. Sitemaps are useful for several reasons:

Sitemaps make it easier for search engine crawlers to find and index your pages. They also help users navigate your site easily.

Sitemaps can be submitted through Google Search Console, Bing Webmaster Tools, and the equivalent tools of other major search engines. This helps bots discover your new and updated pages, although it does not guarantee how often they crawl them.

Sitemaps also make it easier to track your most important pages and monitor how often bots crawl them. If you discover that a page isn’t being crawled as often as you’d like, you can request indexing for it with the URL Inspection tool in Google Search Console.
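Most CMSs and SEO plugins generate the sitemap for you. If you need to build one yourself, here is a minimal Python sketch that uses `xml.etree.ElementTree` to produce the `urlset`/`url`/`loc`/`lastmod` structure defined by the sitemaps.org protocol. The page list and the `build_sitemap` name are assumptions for illustration. A real site would pull URLs and modification dates from its own content store.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: iterable of (absolute_url, last_modified_date). Returns UTF-8 XML bytes."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()  # W3C date, e.g. 2024-01-15
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    example = build_sitemap([
        ("https://www.example.com/", date(2024, 1, 15)),
        ("https://www.example.com/products/", date(2024, 2, 1)),
    ])
    print(example.decode("utf-8"))
```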

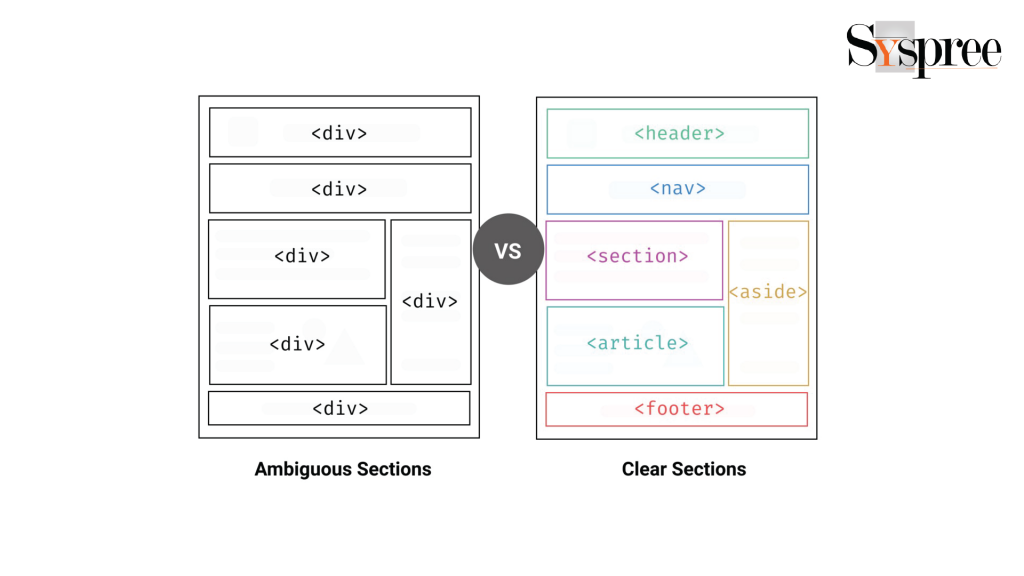

Use semantic markup

Semantic markup means using the correct HTML tag for each element. For example, to display a paragraph, use the <p> tag rather than a <div> tag. Similarly, to display a heading, use the <h1> tag or one of the other five heading tags (<h2> to <h6>) instead of styling another element with a bold or large font.

Use semantic markup because search engines and screen readers can interpret it much more easily than non-semantic markup. As search engines get better at understanding text, clear markup helps them understand what your page is about, and that helps them rank it for relevant searches.
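One quick semantic check is whether a page's real headings form a sensible outline. This minimal Python sketch uses `html.parser` to record the `<h1>` to `<h6>` levels in order. It then reports pages that don't have exactly one `<h1>`, or that skip a level (an `<h2>` followed directly by an `<h4>`, say). Both checks are common conventions rather than strict HTML rules, and the function names are hypothetical.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingOutline(HTMLParser):
    """Records heading levels (1 to 6) in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self.levels.append(int(tag[1]))


def heading_problems(html):
    """Return human-readable problems found in the page's heading outline."""
    outline = HeadingOutline()
    outline.feed(html)
    outline.close()
    problems = []
    h1_count = outline.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one <h1>, found {h1_count}")
    for previous, current in zip(outline.levels, outline.levels[1:]):
        if current > previous + 1:
            problems.append(f"<h{previous}> is followed by <h{current}>, skipping a level")
    return problems
```

A title styled as a bold `<div>` shows up here as a missing `<h1>`, which is exactly the non-semantic pattern described above.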

Fix broken links, if any

Have you ever clicked on a link, only to be met with a 404 error message? How many times did you click on another link on that site? If you were annoyed by the broken link, you didn’t stick around for long. You might have even left the site and gone somewhere else.

The same thing will happen to your visitors if you have broken links on your website. If they can’t find what they’re looking for, they’ll leave. They won’t be back again. And they certainly won’t recommend your website to friends and family.

This is why it’s important to fix broken links as soon as possible!
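Dedicated crawlers and site-audit tools do this at scale. To illustrate the idea, this minimal Python sketch collects the links on one page and flags those that return an error status or don't respond. It sends `HEAD` requests through `urllib`. Some servers reject `HEAD` with a 405, so confirm flagged links before removing them. The function names are hypothetical. The status-lookup function can be swapped out, which keeps the logic testable without network access.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError, URLError
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collects absolute URLs from <a href> attributes, skipping non-page links."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href") if tag == "a" else None
        if href and not href.startswith(("#", "mailto:", "tel:", "javascript:")):
            self.links.append(urljoin(self.base_url, href))


def http_status(url, timeout=10):
    """Return the status of a HEAD request (after redirects), or None if unreachable."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as response:
            return response.status
    except HTTPError as error:
        return error.code
    except URLError:
        return None


def find_broken_links(html, base_url, get_status=http_status):
    """Return (url, status) pairs for links that fail or return 4xx/5xx."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    collector.close()
    broken = []
    for url in dict.fromkeys(collector.links):  # de-duplicate while keeping page order
        status = get_status(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken
```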

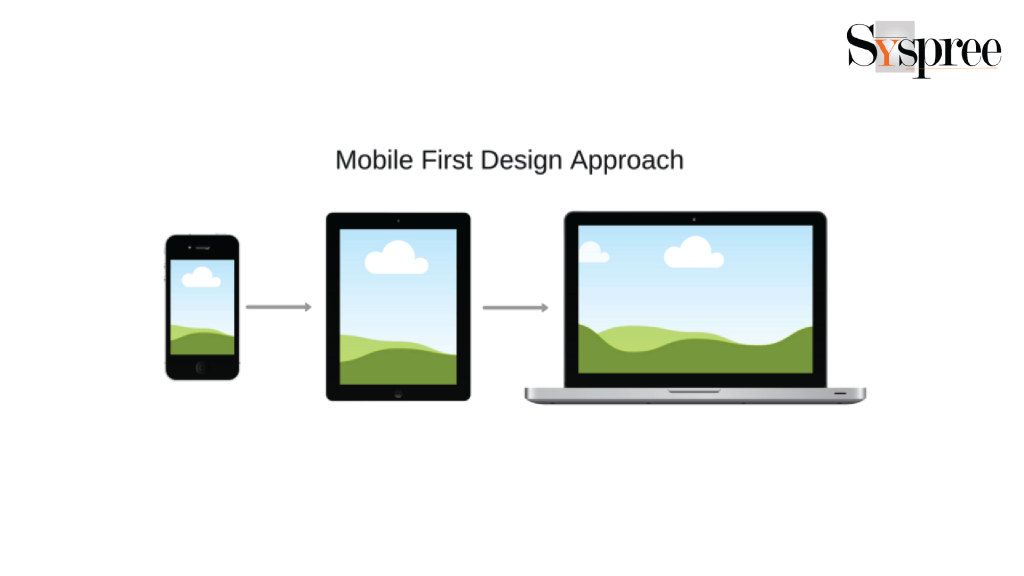

Have a mobile-first approach

The first thing that comes to mind is the need for a mobile-friendly design. Google has favoured mobile-friendly websites in its rankings for years, and this isn’t just hype. The “Mobilegeddon” update Google rolled out in April 2015 was intended to improve the search experience for mobile users by ranking mobile-friendly pages higher in mobile search results.

The good news is that even if you don’t have a responsive design now, you can still make the switch. But the longer you wait, the more likely it is that your competitors will get there first and take your traffic.

Having a mobile-friendly website matters not only for Google’s rankings but also for your customers’ expectations. Mobile devices have become a primary way people browse the web and shop online. If your site isn’t optimized for mobile, you will lose business to better-prepared competitors.

Beyond mobile-friendliness, there are several best practices every developer should follow to make sure search engines like Google and Bing can properly index their web applications.

Conclusion

Web developers have a lot on their plate when it comes to SEO. We’ve shared twelve of the most important SEO best practices you can use today to improve your site’s SEO and user experience. Following these guidelines will help you rank better on Google and increase engagement with your content from search users.

We’ve covered everything from simple tricks like optimizing your images to more advanced tips like fixing 404 error pages and using SSL certificates. Try to implement as many of them as possible, because so much web traffic depends on organic search. If you want to learn about CSS image sprites, we have written a blog post on the topic: CSS Image Sprites: the easy way to use images, web development and SEO.
